Hello!

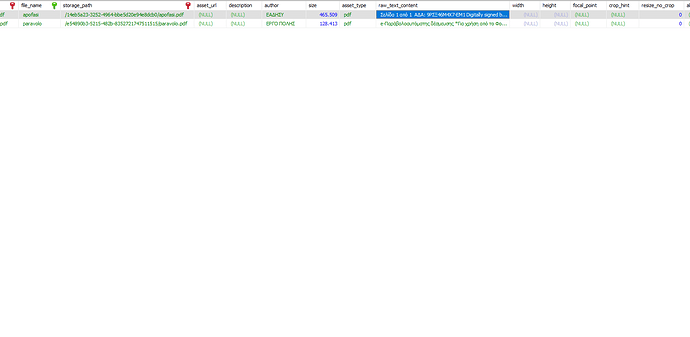

I am trying to upload a PDF or DOCX document to get its metadata, but unfortunately I get no data in raw_text_content (psys_asset). The other fields work OK.

Java version: 11.0.26

Is there anything that could be done? It would help me a lot.

Regards

Hello again!

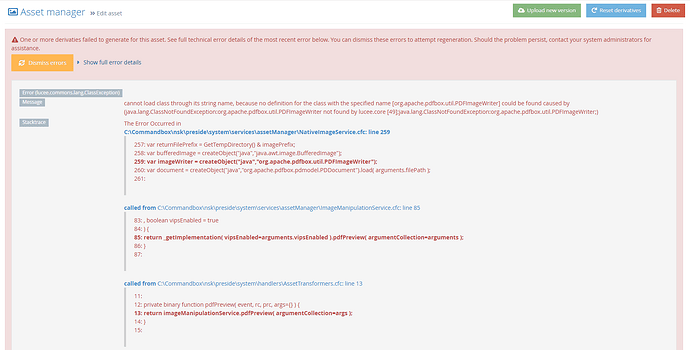

When I try to upload as sysadmin, I get the following error:

If I upload as another CMS user (non-sysadmin), I get no error. In both cases raw_text_content remains null. I hope this helps!

Regards again

The Tika extension is not maintained and has not been touched in a very long time. I believe @m.hnat did some work with it and might have managed to get something working, but other than that, it is not actively used or maintained.

Hello!

I think the problem lies in the syntax of the MANIFEST.MF file, which has to be correct for the jar to become a valid OSGi bundle that can then be dropped successfully into lucee-server/bundles, according to the following

My updated MANIFEST.MF is:

```
Manifest-Version: 1.0
Archiver-Version: Plexus Archiver
Created-By: Apache Maven 3.6.0
Built-By: tim
Build-Jdk: 11.0.26
Specification-Title: Apache Tika application
Specification-Version: 2.4.0
Specification-Vendor: The Apache Software Foundation
Implementation-Title: Apache Tika application
Implementation-Version: 2.4.0
Implementation-Vendor-Id: org.apache.tika
Implementation-Vendor: The Apache Software Foundation
Automatic-Module-Name: org.apache.tika.app
Main-Class: org.apache.tika.cli.TikaCLI
Bundle-Name: Apache Tika App Bundle
Bundle-SymbolicName: apache-tika-app-bundle
Bundle-Description: Apache Tika App jar converted to an OSGi bundle
Bundle-ManifestVersion: 2
Bundle-Version: 2.4.0
```

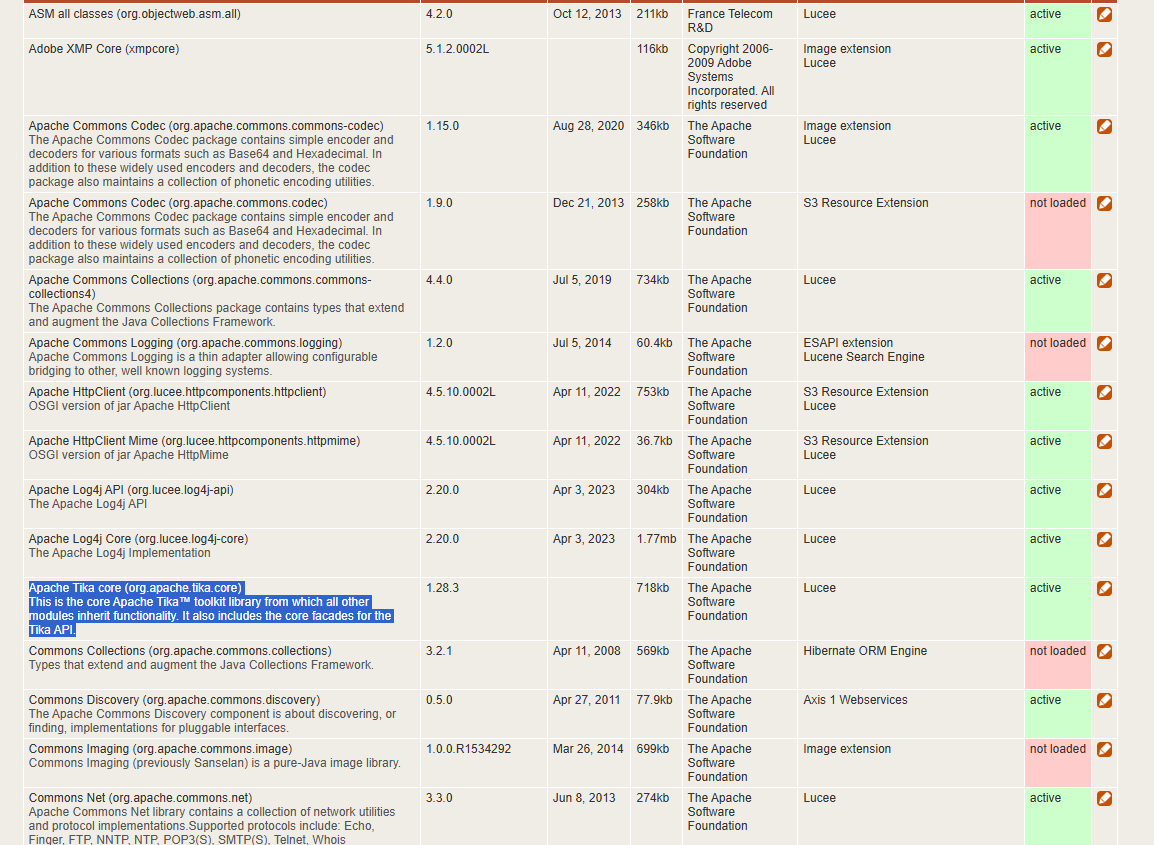

And of course, the Lucee admin (Info > Bundles) shows no Apache Tika 2.4.0 bundle at all (see the screenshot).

Any ideas would help me a lot!

Regards again

Honestly, I’d start again from scratch. Look at the service logic the extension runs and check whether it is indeed still compatible with how Preside does things.

It hasn’t been updated in 9 or 10 years.

Start by getting some simple, working code that calls Tika (or some other library) from your CF code. Then tie it into the Preside logic.
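As a possible starting point, here is a minimal sketch of calling Tika directly from CFML. It assumes the class `org.apache.tika.Tika` can already be loaded by Lucee (for example via `this.javaSettings.loadPaths` in `Application.cfc`; if you load it as an OSGi bundle instead, `createObject` needs the bundle name and version as extra arguments). The function name `extractTextWithTika` is hypothetical. It uses Tika's `Tika` facade, whose `parseToString()` returns at most 100,000 characters unless the limit is changed with `setMaxStringLength()`.

```cfml
/**
 * Hypothetical helper: returns the plain text Apache Tika extracts from a local file.
 * Assumption: org.apache.tika.Tika is already on Lucee's classpath.
 */
string function extractTextWithTika( required string filePath ) {
    // The Tika facade detects the file type (PDF, DOCX, ...) and picks a parser
    var tika = createObject( "java", "org.apache.tika.Tika" ).init();

    // parseToString() truncates at 100,000 characters by default; -1 removes the limit
    tika.setMaxStringLength( -1 );

    var file = createObject( "java", "java.io.File" ).init( arguments.filePath );
    return tika.parseToString( file );
}
```

Called as, for example, `extractTextWithTika( ExpandPath( "/samples/contract.pdf" ) )` (a hypothetical path), it should return the document's text as a string. Once that works on its own, the result is what you would eventually save into raw_text_content.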

Looking at the code, I’d suggest the only really viable way to do this would be to create an interceptor (a core ColdBox concept) that listens for postInsertObjectData() and adds the metadata that way. It looks like we don’t currently have any better interception points that would achieve this cleanly.

Your interceptor would look something like:

```cfml
component extends="coldbox.system.Interceptor" {

    property name="myCustomMetaService" inject="delayedInjector:myCustomMetaService";

    public void function configure() {}

    public function postInsertObjectData( event, interceptData ) {
        if ( arguments.interceptData.objectName == "asset" || arguments.interceptData.objectName == "asset_version" ) {
            myCustomMetaService.readAssetMetaWithTika( sourceObject=arguments.interceptData.objectName, recordId=arguments.interceptData.newId );
        }
    }

}
```

Do NOT have your service logic extend the core services the way the Tika extension does.
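The interceptor also has to be registered before ColdBox will call it. A sketch for an application-level interceptor is below. It assumes the file lives at `application/interceptors/TikaInterceptor.cfc` (a hypothetical name and path) and the usual Preside application `Config.cfc` layout. Extensions have their own `config/Config.cfc`, so check how yours is structured and adjust accordingly.

```cfml
// application/config/Config.cfc (hypothetical excerpt)
component extends="preside.system.config.Config" {

    public void function configure() {
        super.configure();

        // Register the custom interceptor so that ColdBox announces
        // postInsertObjectData() to it
        interceptors.append( { class="app.interceptors.TikaInterceptor", properties={} } );
    }

}
```

Keeping the extraction logic in your own service, injected into the interceptor, means nothing depends on the internals of core services that may change between Preside versions.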

Thanks a lot for your interest!

This extension is crucial to my job (law data scientist), and thanks to your assistance it works again, at least for me.

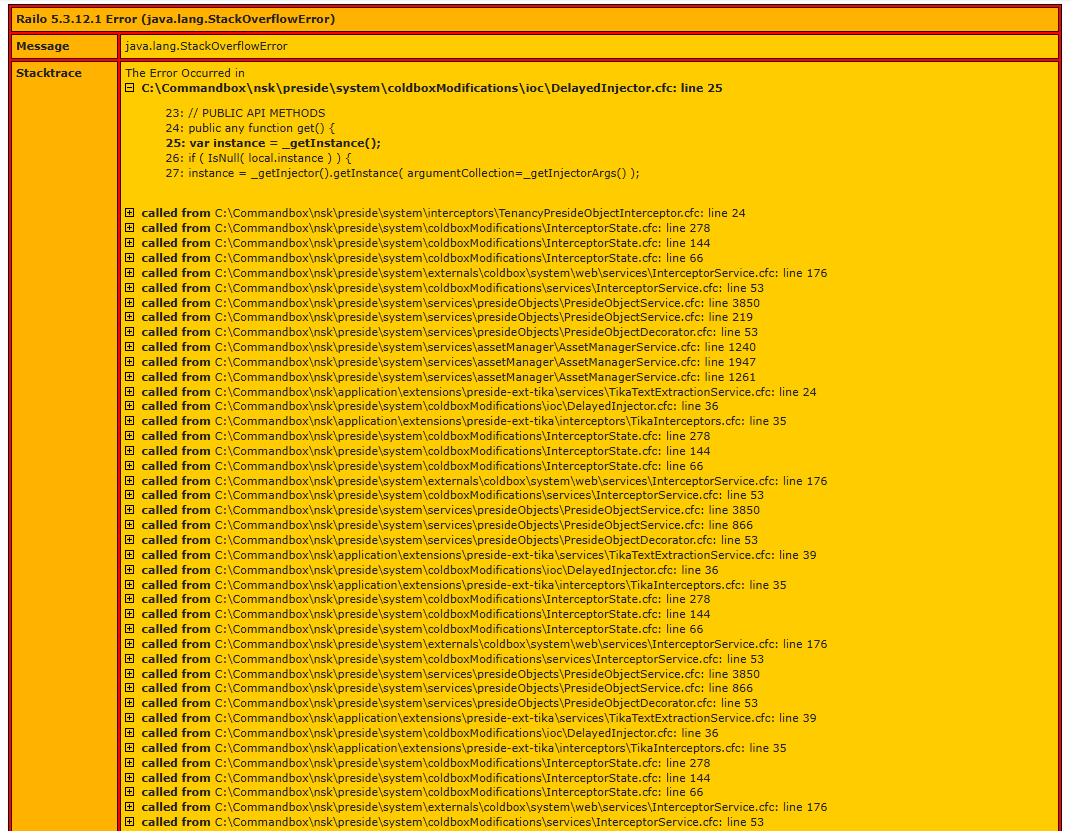

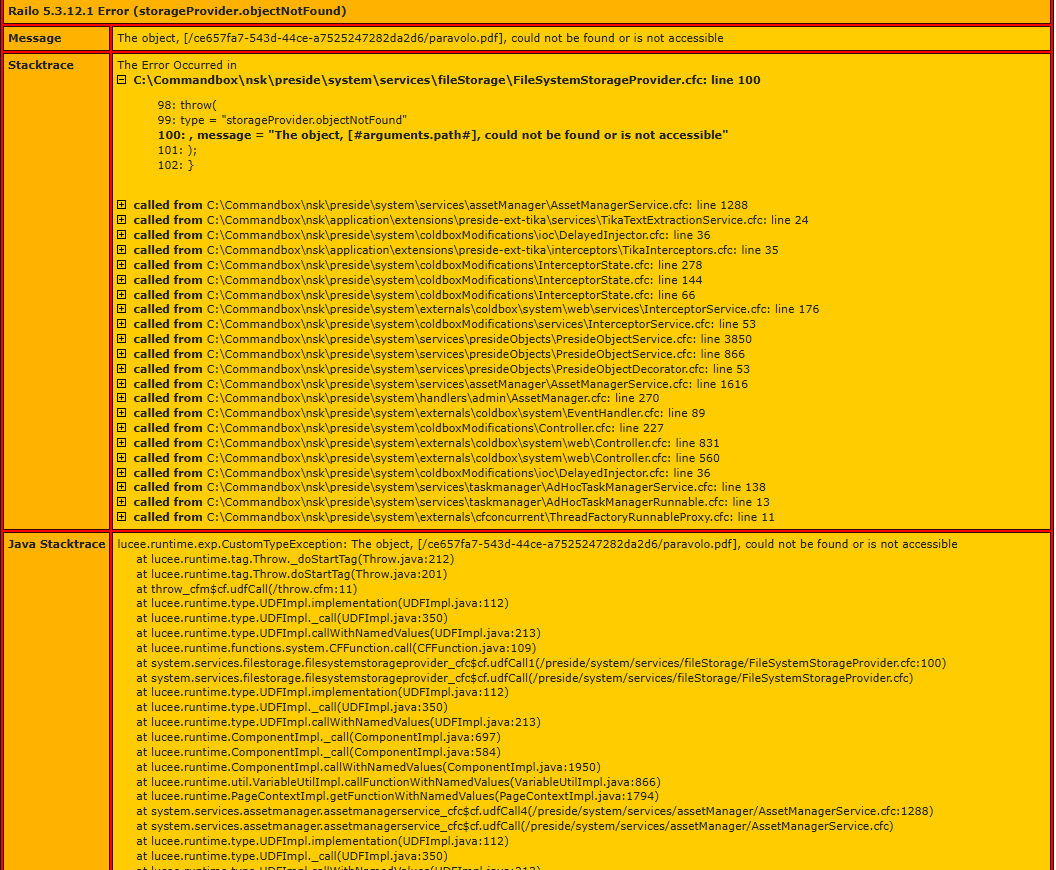

Unfortunately, I get some errors in the logs during file upload. Any idea what is wrong?

Additionally, when I try to delete the previously uploaded file from the asset manager, I get the following:

Regards again

A stack overflow means some kind of infinite loop. Your interceptor logic is eventually causing the interceptor to fire again, so you’ll need to trace that through to see why.
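One common way to break this kind of loop while you trace it is a re-entrancy guard. The sketch below is a hypothetical, self-contained illustration (the function name `runOncePerRecord` and the `request` key prefix are made up): it runs the work for a record only if that record is not already being processed further up the same request's call stack. In a real interceptor, `work` would be the call into your extraction service. It only protects against recursion within one request, so it does not replace fixing the underlying trigger.

```cfml
<cfscript>
/**
 * Hypothetical sketch of a request-scoped re-entrancy guard.
 * Runs `work` for a record only if that record is not already being
 * processed earlier in the current request.
 */
boolean function runOncePerRecord( required string recordId, required any work ) {
    var guardKey = "tikaGuard_" & arguments.recordId;

    if ( StructKeyExists( request, guardKey ) ) {
        return false; // re-entered for the same record: skip instead of recursing
    }

    request[ guardKey ] = true;
    try {
        arguments.work();
    } finally {
        StructDelete( request, guardKey ); // always clear the flag, even on error
    }
    return true;
}

// Simulate the problem: saving the extracted text fires the
// interceptor again for the same record.
request.extractionRuns = 0;
firstCall = runOncePerRecord( "asset-1", function() {
    request.extractionRuns++;
    request.nestedCall = runOncePerRecord( "asset-1", function() {
        request.extractionRuns++;
    } );
} );
</cfscript>
```

The outer call does the work once; the nested call for the same record returns `false` instead of recursing, and the flag is removed afterwards so later requests are unaffected.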

It's hard to know what might be causing the issues without seeing the code. Could you post your Tika interceptor code?

I have made slight changes to the original Tika extension:

```cfml
component extends="coldbox.system.Interceptor" {

    property name="adhocTaskmanagerService"    inject="delayedInjector:adhocTaskmanagerService";
    property name="tikaTextExtractionService"  inject="delayedInjector:tikaTextExtractionService";
    property name="systemConfigurationService" inject="delayedInjector:systemConfigurationService";

    public void function configure() {}

    public void function postInsertObjectData( event, interceptData ) {
        /*
        if ( StructKeyExists( arguments.interceptData, "skipTika" ) ) {
            return;
        }
        */
        var objectName = arguments.interceptData.objectName ?: "";

        if ( objectName == "asset" or objectName == "asset_version" ) {
            tikaTextExtractionService.processAsset( arguments.interceptData.newId ?: "" );
            // _processAsset( arguments.interceptData.newId ?: "", objectName );
        }
    }

    public void function postUpdateObjectData( event, interceptData ) {
        /*
        if ( StructKeyExists( arguments.interceptData, "skipTika" ) ) {
            return;
        }
        */
        var objectName = arguments.interceptData.objectName ?: "";

        // The parentheses matter: AND binds tighter than OR, so without them every
        // "asset" update matches, whether or not storage_path changed
        if ( ( objectName == "asset" or objectName == "asset_version" ) && Len( arguments.interceptData.changedData.storage_path ?: "" ) ) {
            tikaTextExtractionService.processAsset( arguments.interceptData.id ?: "" );
            // _processAsset( arguments.interceptData.id ?: "", objectName );
        }
    }

    // helpers
    private void function _processAsset( recordId, objectName ) {
        if ( Len( Trim( arguments.recordId ) ) && _readMetaEnabled() && tikaTextExtractionService.fileTypeIsSupported( argumentCollection=arguments ) ) {
            adhocTaskmanagerService.createTask(
                  event             = "tika.processAsset"
                , args              = { recordId=arguments.recordId, objectName=arguments.objectName }
                , runNow            = true
                , discardOnComplete = true
            );
        }
    }

    /**
     * Perhaps this should be revisited. This setting is about metadata rather than text.
     * We could have our own system config to enable tika extraction and perhaps to choose
     * the filetypes we want to support it for...
     */
    private boolean function _readMetaEnabled() {
        var setting = systemConfigurationService.getSetting( "asset-manager", "retrieve_metadata" );

        return IsBoolean( setting ) && setting;
    }

}
```

Regards again

I think the issue here is that you’re using postUpdateObjectData(). The “delete” action leads to a record update, as it is only a soft deletion: the asset is moved to the trash folder.

When an asset’s file is changed, a new asset_version record is created. This is why I think you only need postInsertObjectData, but for both asset and asset_version records.

There is then no need (or desire) to run this on record update, as lots of updates to the asset record can be triggered by various other logic.

Thanks for the help again!

I am not sure what I should put for asset_version:

```cfml
public void function postInsertObjectData( event, interceptData ) {
    /*
    if ( StructKeyExists( arguments.interceptData, "skipTika" ) ) {
        return;
    }
    */
    var objectname = arguments.interceptData.objectname ?: "";

    if ( objectName == "asset" ) {
        tikaTextExtractionService.processAsset( arguments.interceptData.newId ?: "" );
    } else if ( objectName == "asset_version" ) {
        // tikaTextExtractionService.processAssetVersion( arguments.interceptData.id ?: "" );
        // or, as I am not sure:
        // tikaTextExtractionService.processAssetVersion( arguments.interceptData.newId ?: "" );
    }
}
```

Regards again

Is it OK?

If it works! But yes, something along these lines is what I’d expect to work.


I also like the idea of using an ad-hoc task to do the work in the background. Nice touch.
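For reference, a minimal sketch of how the insert handler could route both record types through the background helper. It assumes the `_processAsset()` helper and the `tika.processAsset` task handler from the interceptor posted earlier in this thread, and that `newId` holds the id of the inserted record, as in the examples above. Whether that task handler correctly resolves an `asset_version` id to its file is an assumption to verify in your own service.

```cfml
// Hypothetical sketch: react to inserts only, for both asset and asset_version,
// and queue the extraction as an ad-hoc background task via _processAsset()
public void function postInsertObjectData( event, interceptData ) {
    var objectName = arguments.interceptData.objectName ?: "";

    if ( objectName == "asset" || objectName == "asset_version" ) {
        // objectName is passed along so the task knows which kind of record the id belongs to
        _processAsset( arguments.interceptData.newId ?: "", objectName );
    }
}
```

Running the extraction in a task keeps large PDFs and DOCX files from slowing down the upload request itself.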

I will try running it in the background, in case that is what it needs to work properly. Additionally, I think you should offer online courses for Preside; they would definitely help a lot.

Regards